Creating a Streaming Ingestion Task in IDMC

Introduction

I'm Shiwani from the Data Analytics Division.

This time, I tried creating a Streaming Ingestion Task in IDMC.

A Streaming Ingestion task enables large-scale data ingestion from various streaming sources, including logs, clickstreams, social media, and IoT devices. With Mass Ingestion Streaming, you can efficiently merge or segment data from these streaming sources in real time.

Objective

- Create a Streaming Ingestion task that reads data from a flat-file source and writes it to a Kafka topic in real time.

- Read employee data in real time and ingest it into a streaming source for analytics purposes.

Advance Preparation

The following preparations are assumed to be ready in advance:

- IICS Secure Agent and VPC have been configured.

- A connection to the flat file using a Connector has been pre-established.

- The Kafka connection has been set up, and the topic has been created in advance. If you haven't done it yet, please refer to this article.

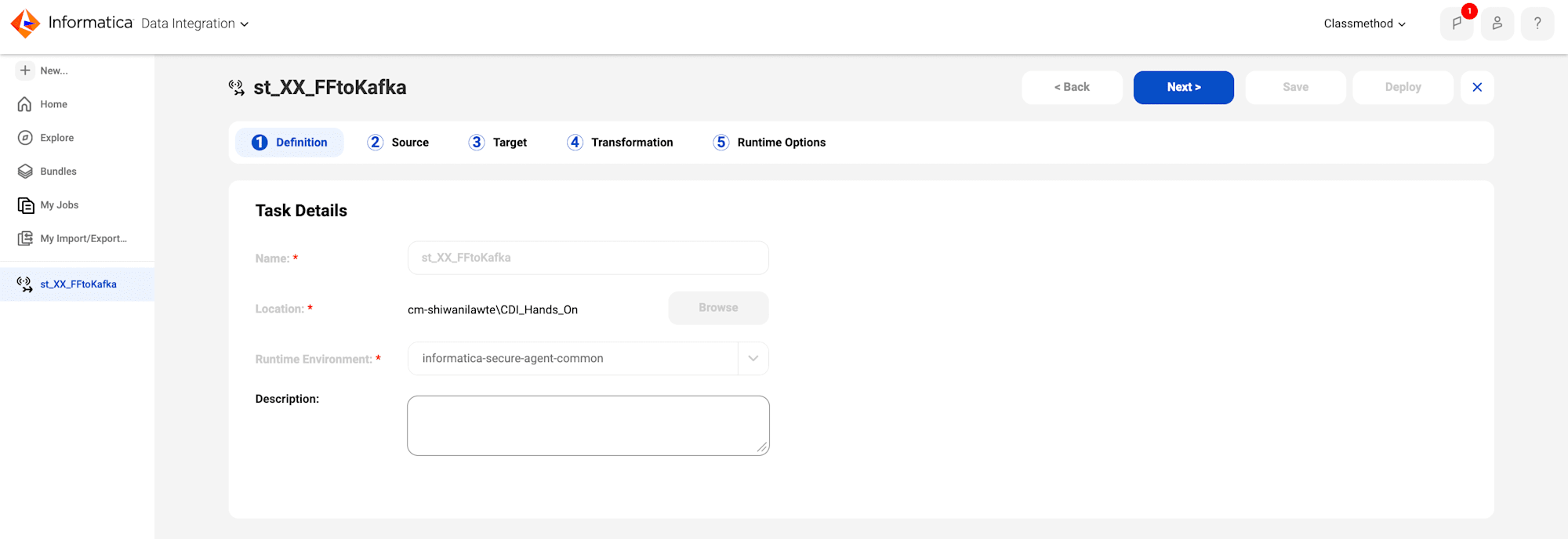
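Before building the task, it can help to confirm that the machine running the Secure Agent can reach the Kafka broker at all. The following is a minimal sketch using only the Python standard library. The function name `broker_reachable` and the address `localhost:9092` are assumptions for illustration (9092 is the conventional Kafka listener port); replace them with your own broker details. It only checks that a TCP connection can be opened. It does not verify that the topic exists or that the IDMC connection is configured correctly.

```python
import socket


def broker_reachable(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port can be opened."""
    try:
        # create_connection raises OSError (or a subclass) on failure
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


# Hypothetical broker address; replace with your own before running.
# print(broker_reachable("localhost", 9092))
```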

Create Streaming Ingestion Task

- From the My Services window, select Data Integration.

- In the New Asset window, select Data Ingestion and Replication, then select Streaming Ingestion and Replication Task.

- In the Task Name field, enter st_XX_FFtoKafka.

- In the Runtime Environment field, select the runtime environment. Here, I selected INFA-SERVER.

- Click Next.

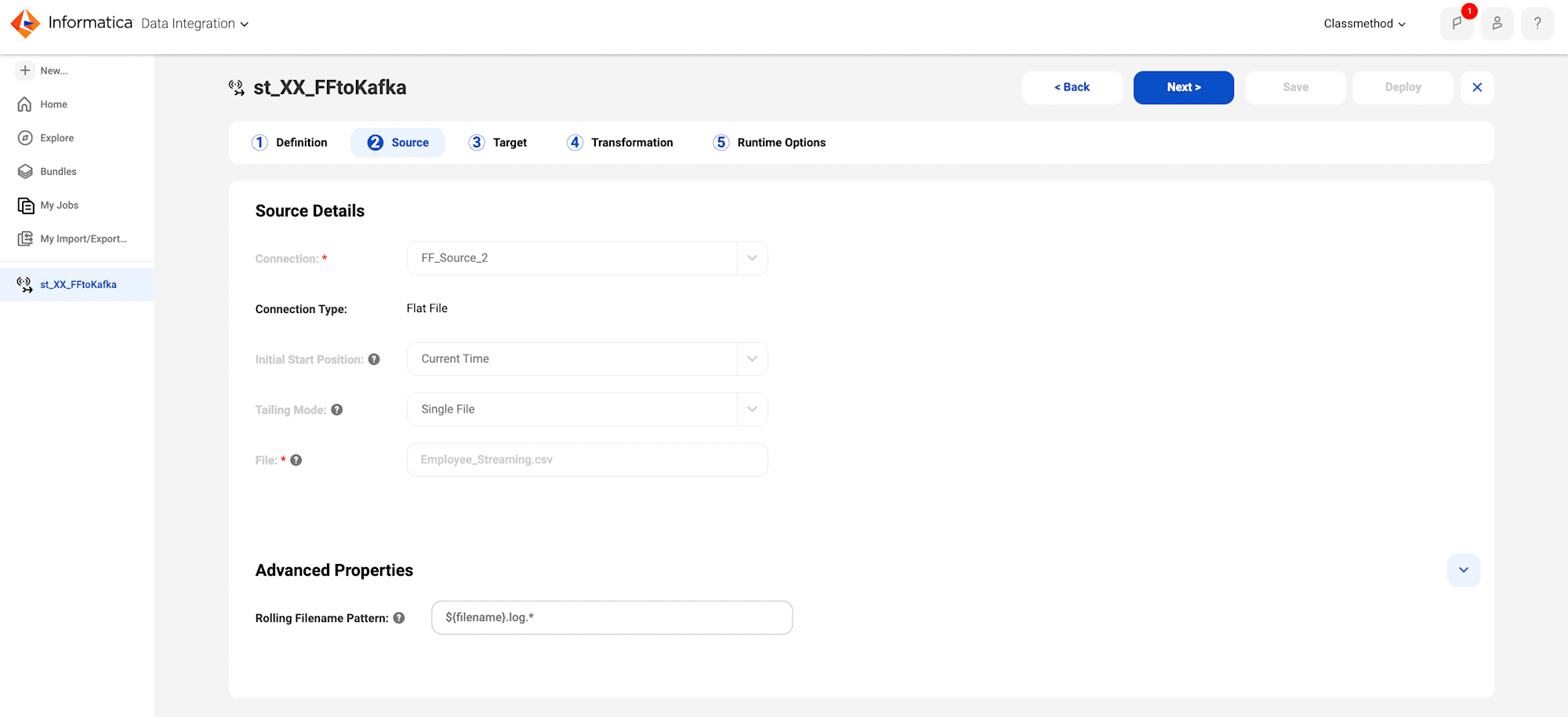

- From the Connection drop-down, select the flat file connection. Here, I selected FF_Source_2.

- In the File field, enter Employee_Streaming.csv.

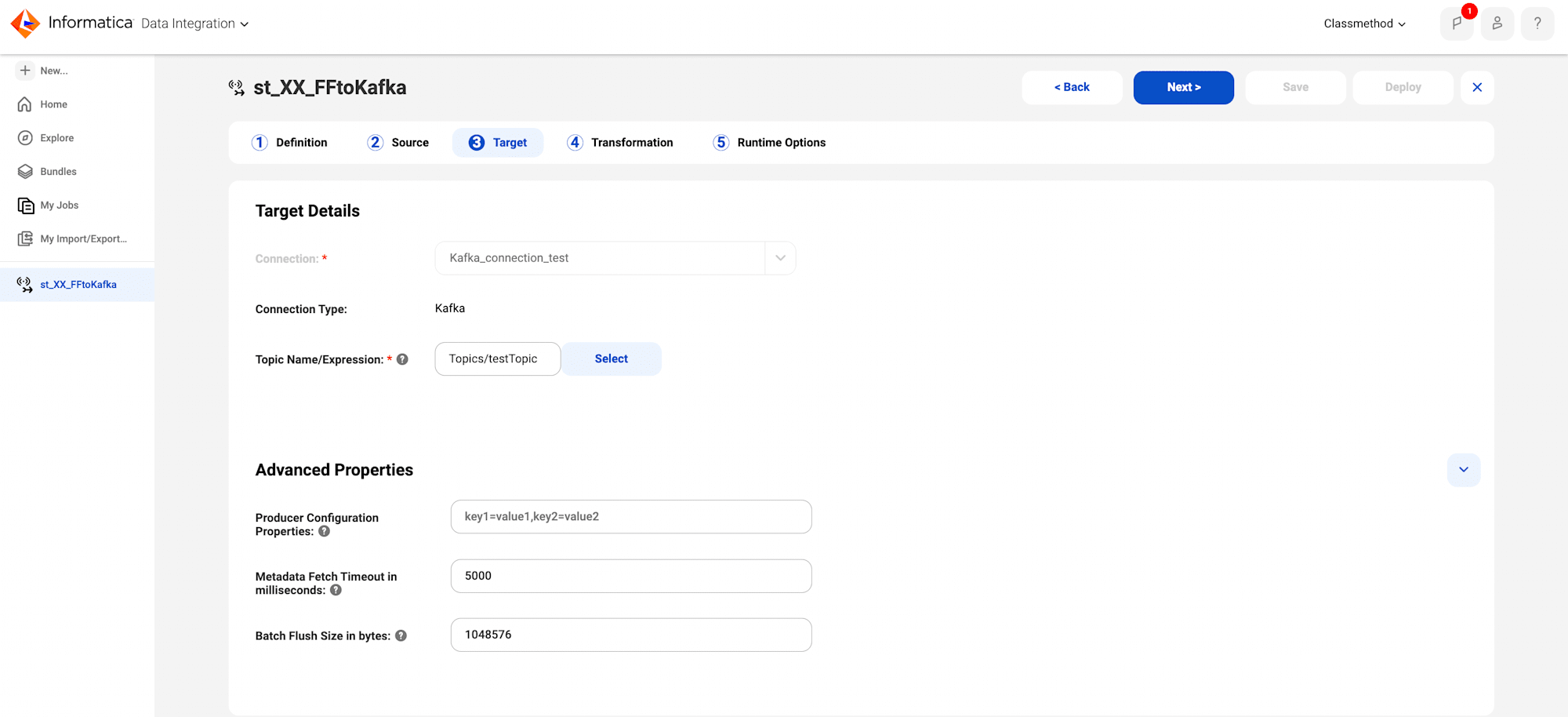

- For the target, select the Kafka connection and the topic.

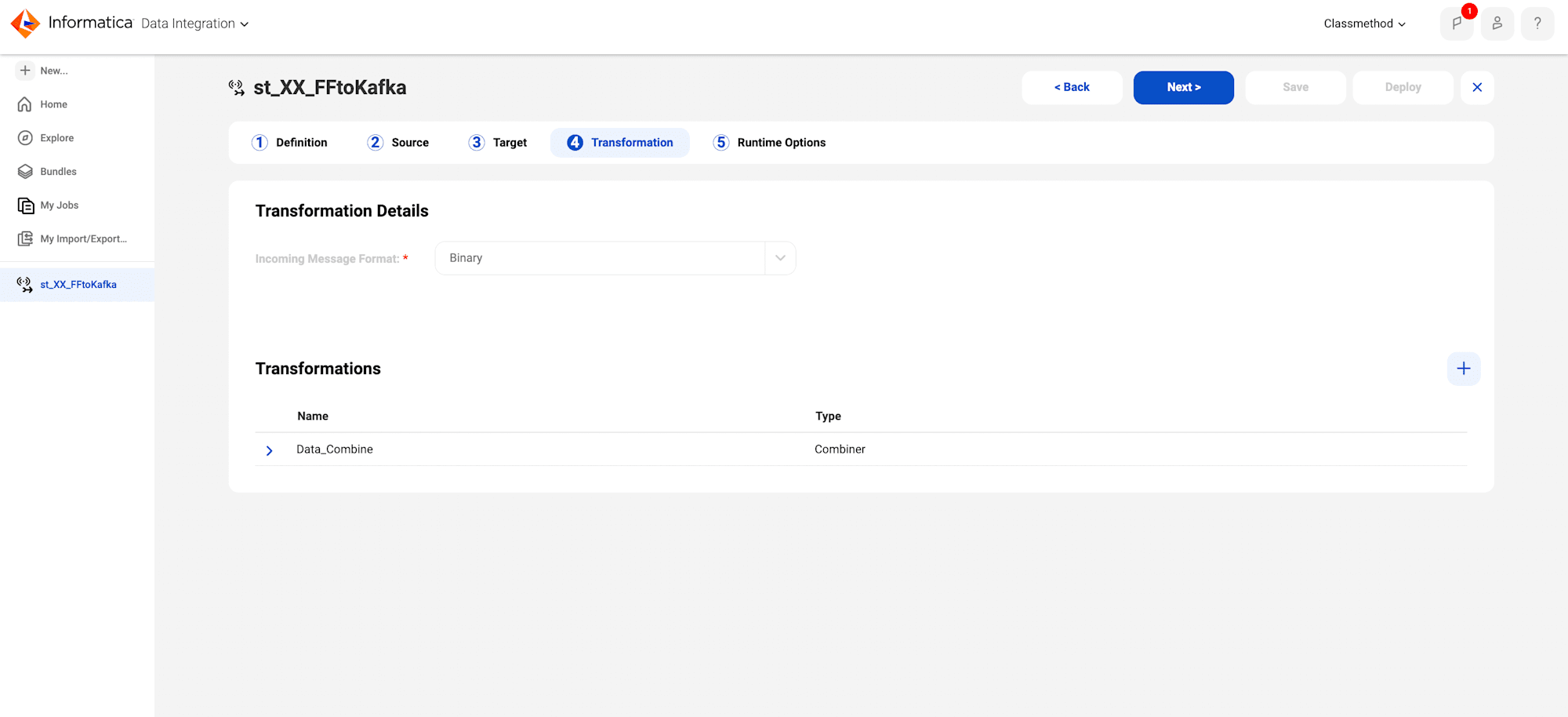

- In the New Transformation window, select Combiner.

- Enter the transformation name as Data_Combine.

- Set the Minimum Number of Events to 2.

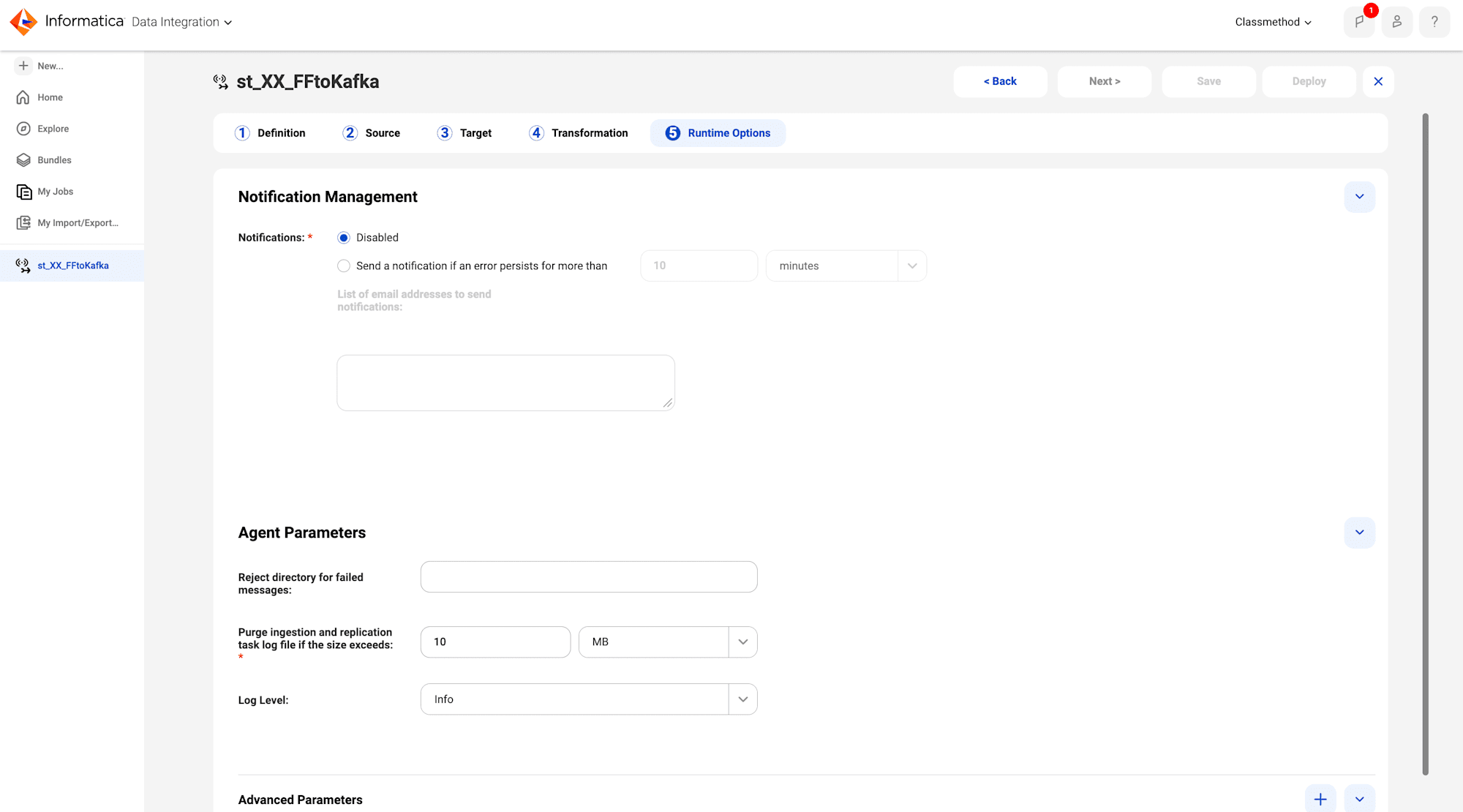

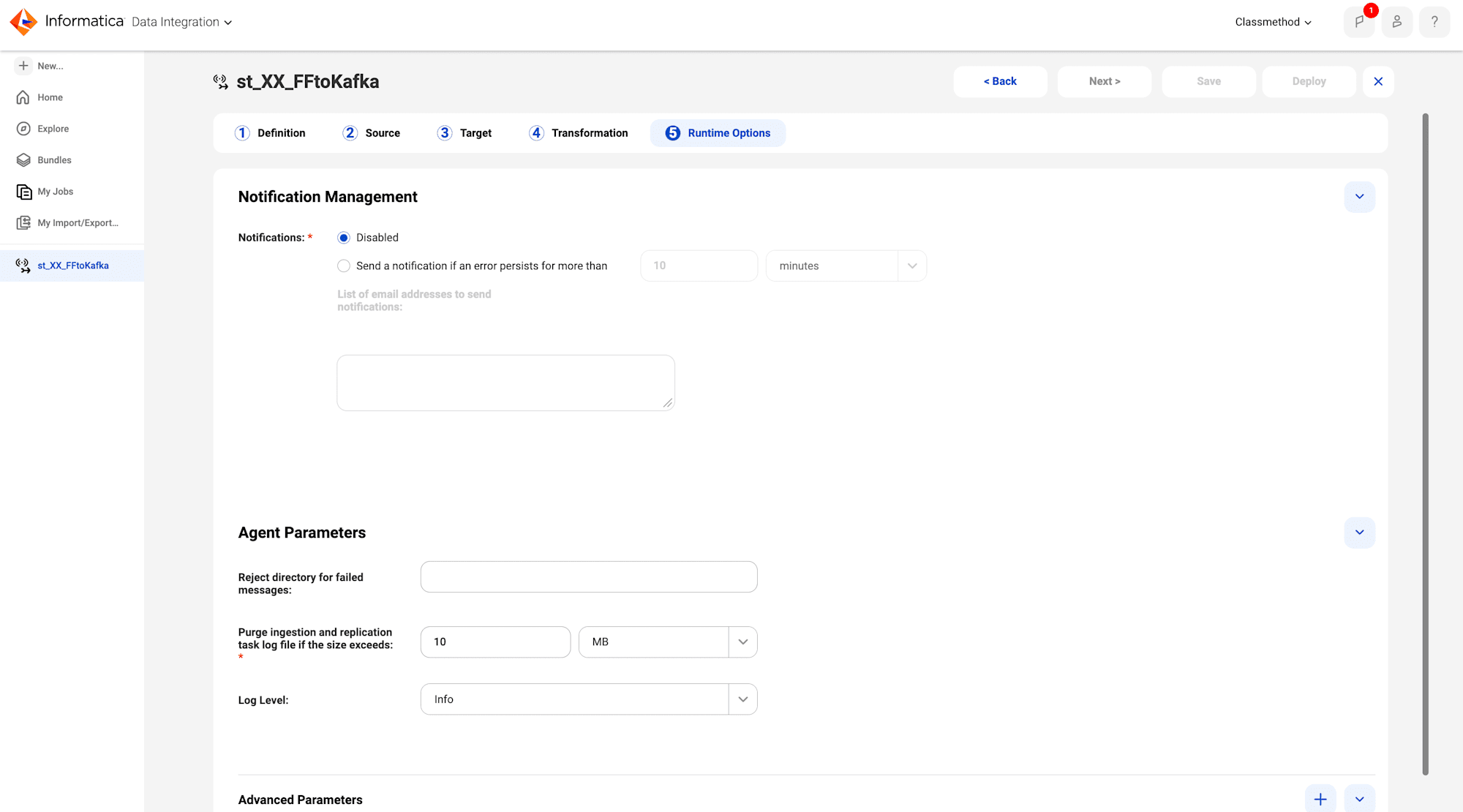

- In the Runtime Options step, you can set the email notification and other advanced settings for the task. For this experiment, retain the default settings.

- Click Save.

Execution

- To run the task as a job in IDMC, you must deploy it on the Secure Agent.

- Click Deploy.

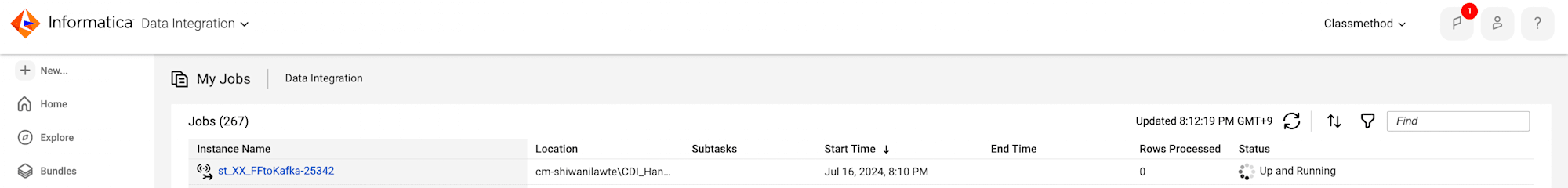

- To monitor the deployment status of the task, click My Jobs.

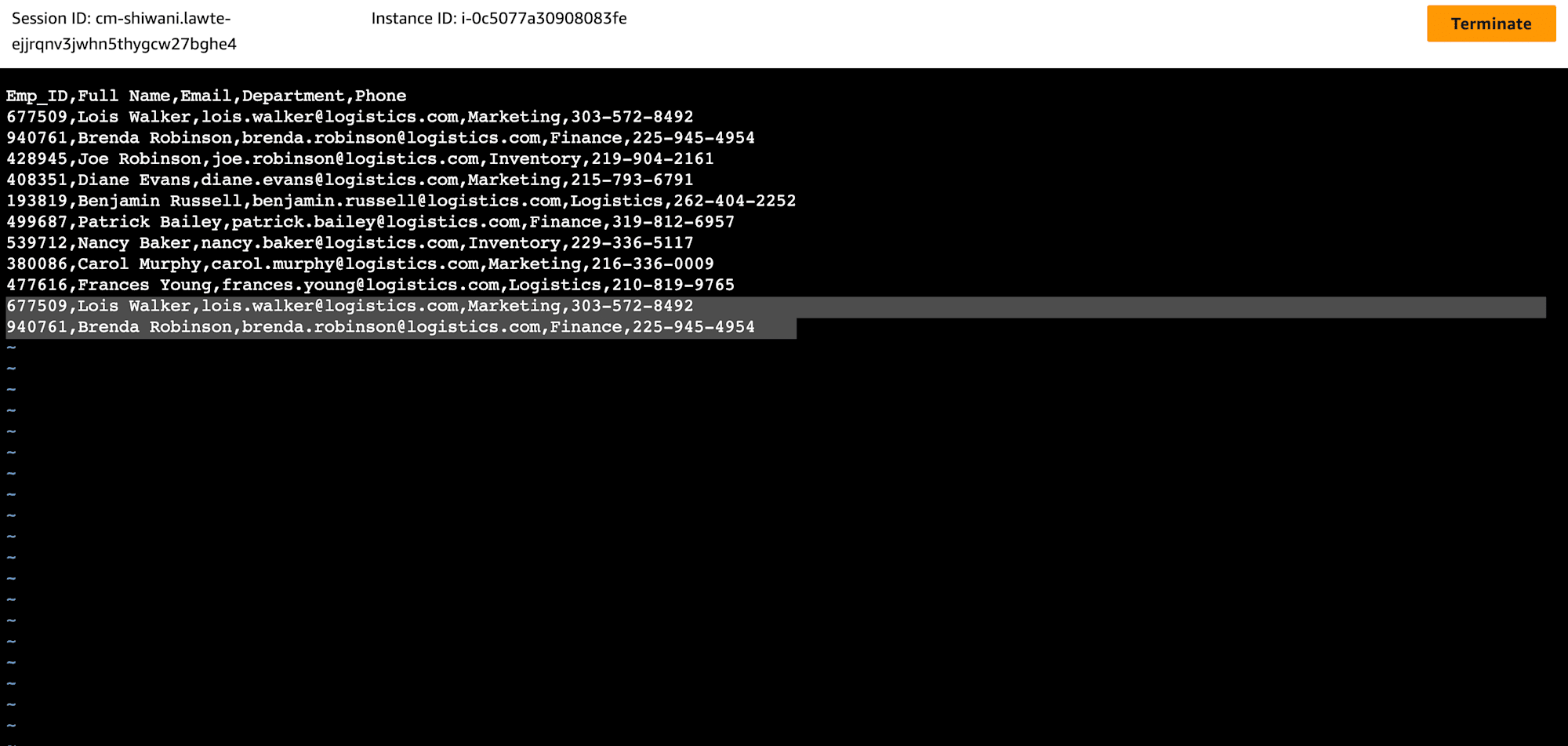

- Open the Employee_Streaming.csv file on the Secure Agent instance.

- Copy the first two rows of data from the file and paste them at the end of the same file. Save the file in the same location.
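The manual copy-and-paste step above can also be scripted, which is handy when you want to repeat the test several times. The following is a minimal sketch, not part of the original procedure. The function name `append_first_rows` is hypothetical, and it assumes the file is UTF-8 encoded and has a header row that should not be copied; pass `has_header=False` if your file has none.

```python
import csv
from pathlib import Path


def append_first_rows(csv_path, n_rows=2, has_header=True):
    """Append copies of the first n_rows data rows to the end of csv_path.

    Returns the list of rows that were appended.
    """
    path = Path(csv_path)
    with path.open(newline="", encoding="utf-8") as f:
        rows = list(csv.reader(f))

    start = 1 if has_header else 0  # skip the header row if there is one
    to_append = rows[start:start + n_rows]

    # If the file does not end with a newline, add one first so the
    # appended rows start on their own line.
    text = path.read_text(encoding="utf-8")
    needs_newline = bool(text) and not text.endswith("\n")

    with path.open("a", newline="", encoding="utf-8") as f:
        if needs_newline:
            f.write("\n")
        csv.writer(f, lineterminator="\n").writerows(to_append)
    return to_append


# Example call (run it on the Secure Agent instance, in the source directory):
# append_first_rows("Employee_Streaming.csv")
```

Each call adds new lines to the file, so the running task should pick them up as new events in the same way as a manual edit.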

Results

- Navigate to the My Jobs page of the Data Integration service.

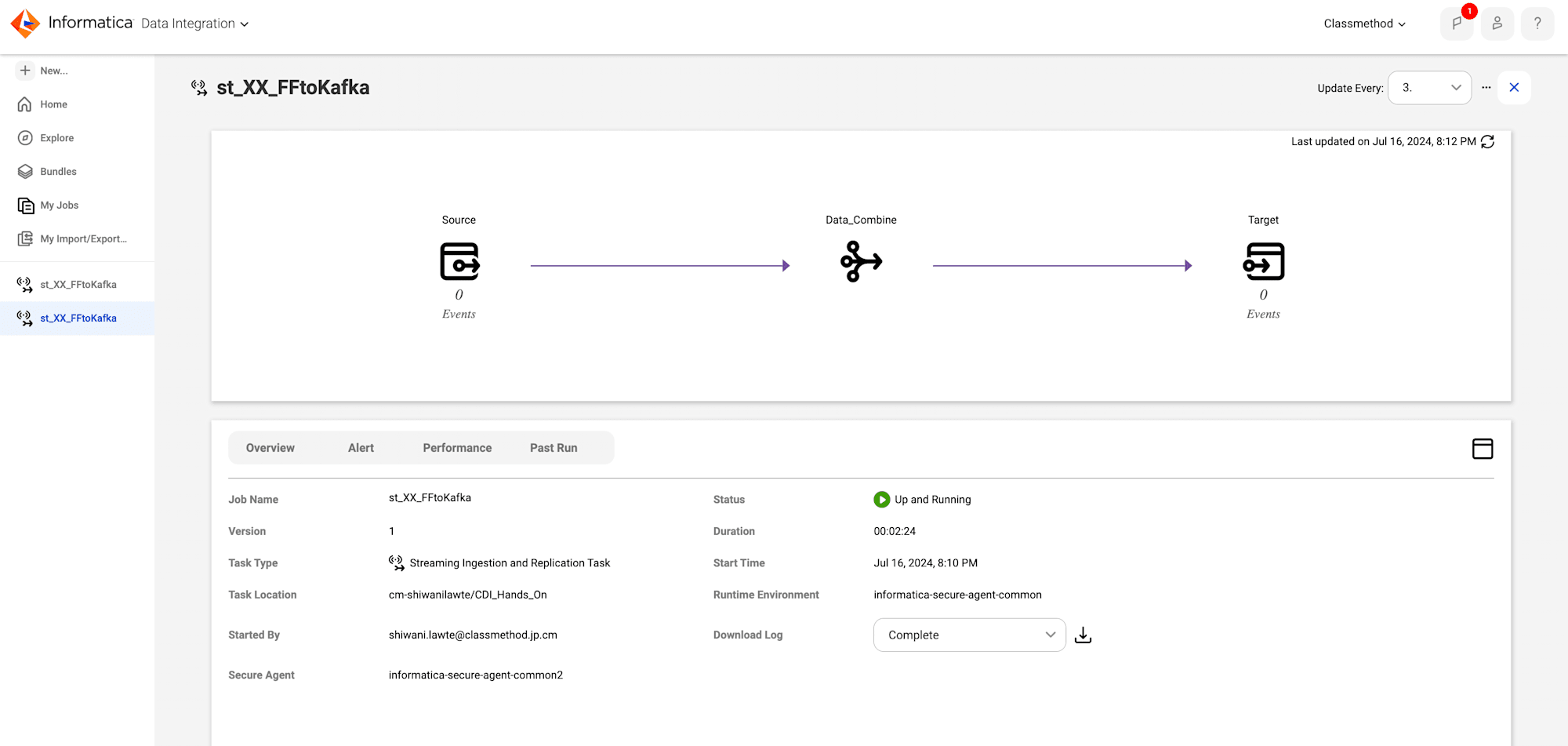

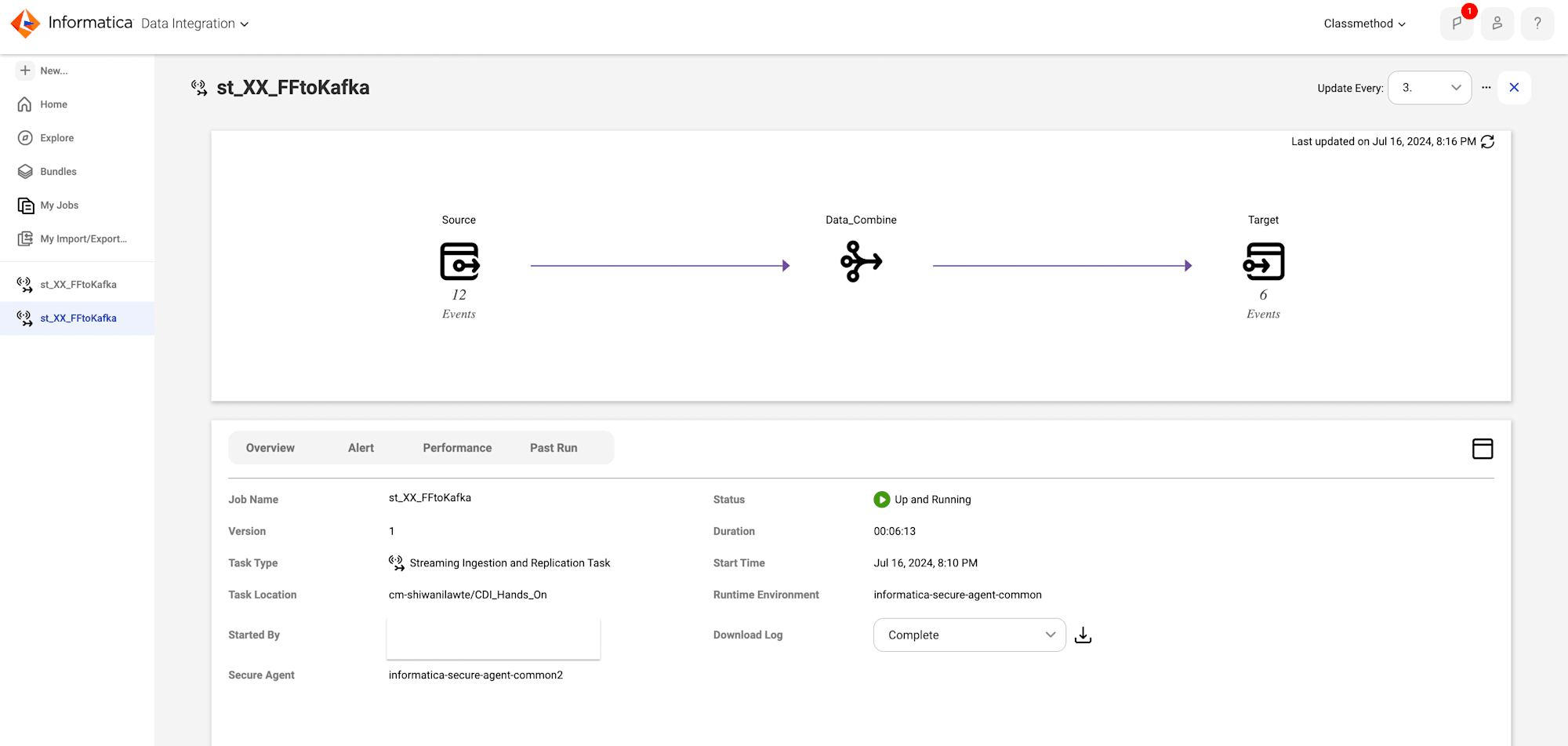

- To check the task status, click the Instance Name of the Streaming Ingestion task.

- Verify the number of events in the Source and Target.

- Because the Combiner transformation combines every 2 events into 1 event, the number of events in the Target is half the number of events in the Source.
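To make the event counts concrete, here is a toy model of the combining behaviour. It is only a sketch for reasoning about the numbers and is not how Informatica implements the Combiner transformation. The function name `combine_events`, the newline delimiter, the sample rows, and the handling of leftover events are all assumptions for illustration; the real transformation has further settings that are not modelled here.

```python
def combine_events(events, min_events=2, delimiter="\n"):
    """Toy combiner: join every `min_events` events into one event.

    Events left over at the end (fewer than `min_events`) are returned
    separately in `pending` instead of being emitted.
    """
    combined = []
    pending = []
    for event in events:
        pending.append(event)
        if len(pending) == min_events:
            combined.append(delimiter.join(pending))
            pending = []
    return combined, pending


# Made-up sample rows standing in for employee records.
source_events = ["101,Asha", "102,Ben", "103,Chen", "104,Dia"]
target_events, leftover = combine_events(source_events, min_events=2)
print(len(source_events), len(target_events), leftover)  # prints: 4 2 []
```

With 4 source events and a minimum of 2 events per combined event, the model produces 2 target events, which matches the halving seen in the job details.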

Conclusion

I have successfully developed and tested a Streaming Ingestion Task in IDMC.

The Streaming Ingestion Task can read data from a flat-file source and write it to a Kafka topic in real time.